Knox Basics

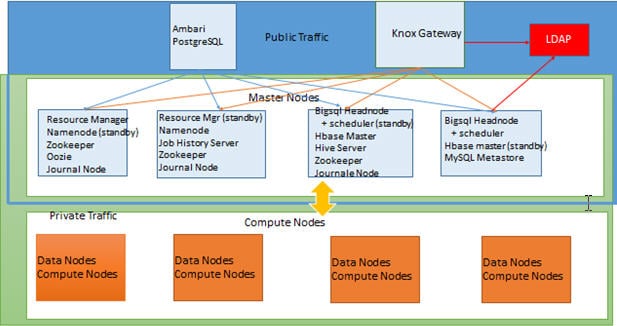

Knox Gateway is another Apache project that addresses the concern of secured access to the Hadoop cluster from corporate networks. Knox Gateway provides a single point of authentication and access for Apache Hadoop services in a cluster. Knox runs as a cluster of servers in the DMZ, isolating the Hadoop cluster from the corporate network. The key feature of Knox Gateway is that it provides perimeter security for Hadoop REST APIs by limiting the network endpoints required to access a Hadoop cluster. Thus, it hides the internal Hadoop cluster topology from end users. Authentication and token verification both happen at this single point on the perimeter of the Hadoop cluster.

Knox Gateway provides security for multiple Hadoop clusters, with these advantages:

- Simplifies access: Extends Hadoop’s REST/HTTP services by encapsulating Kerberos within the cluster.

- Enhances security: Exposes Hadoop’s REST/HTTP services without revealing network details, providing SSL out of the box.

- Centralized control: Enforces REST API security centrally, routing requests to multiple Hadoop clusters.

- Enterprise integration: Supports LDAP, Active Directory, SSO, SAML and other authentication systems.

In short, Knox works as a proxy, hiding the actual structure of the Hadoop cluster and providing a single point of access to all the services. For example, compare how WebHDFS is queried for the directory /user with and without the Knox gateway.

Without using Knox Gateway

# curl -k "http://nn1.localdomain:50070/webhdfs/v1/user?user.name=hdfs&op=GETFILESTATUS"

Using Knox Gateway

# curl -k -u guest:guest-password "https://dn1.localdomain:8443/gateway/default/webhdfs/v1/user?op=LISTSTATUS"

Here, dn1.localdomain is the Knox gateway host.

When I use the Knox gateway, nobody outside the cluster needs to know that port 50070 is used internally. This way I can hide all the internal ports from the external world. I can also block all the other ports and keep only the Knox gateway port (8443) open, for a more secure environment.
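Seen from the client, the port hiding described above amounts to a URL rewrite: the internal host and port are replaced by the gateway address. Here is a minimal sketch of that mapping, assuming the host names, port and `default` topology from the example. The function name `to_gateway_url` is made up for illustration and is not part of Knox.

```shell
#!/bin/sh
# Hypothetical helper (not part of Knox): rewrite a direct WebHDFS URL
# into the equivalent Knox gateway URL.
# Assumptions taken from the example above: gateway host dn1.localdomain,
# gateway port 8443, gateway path and topology "gateway/default".
to_gateway_url() {
  # $1 = direct URL, e.g. http://nn1.localdomain:50070/webhdfs/v1/user?op=LISTSTATUS
  printf '%s\n' "$1" |
    sed -e 's#^http://[^/]*/#https://dn1.localdomain:8443/gateway/default/#' \
        -e 's#user\.name=[^&]*&\{0,1\}##'
  # The second expression drops a leading user.name=... parameter, because
  # the gateway call in the example identifies the caller with -u instead.
}

# Example (needs a running gateway, so it is left commented out):
# curl -k -u guest:guest-password "$(to_gateway_url 'http://nn1.localdomain:50070/webhdfs/v1/user?op=LISTSTATUS')"
```

The client only ever needs the gateway address; the NameNode host and port 50070 never appear in the request it sends.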

Knox supports only REST API calls for the following Hadoop services:

- WebHDFS

- WebHCat

- Oozie

- HBase

- Hive

- YARN

For the exam, we will install and configure the Knox service and verify that we can access WebHDFS through the Knox gateway.

Install and Configure Knox

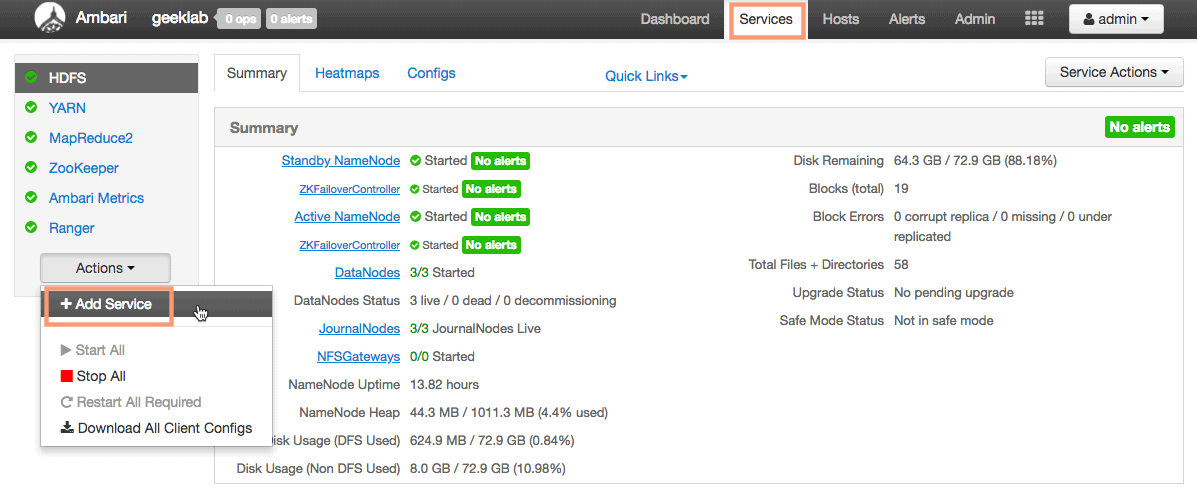

To add the Knox service using Ambari, go to the Services page and select “Add Service” from the “Actions” drop-down in the left sidebar.

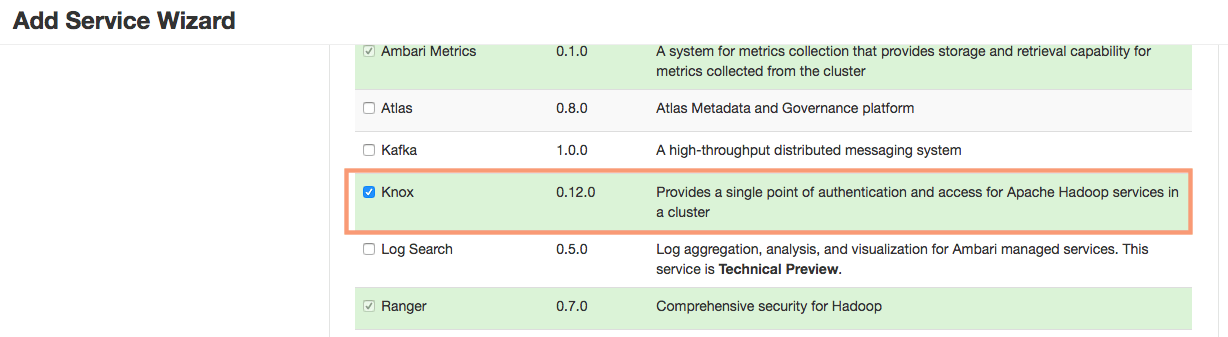

1. Add Service Wizard

Ambari will open up an “Add Service Wizard” to add the Knox service. Select the Knox service from the list of services and click next.

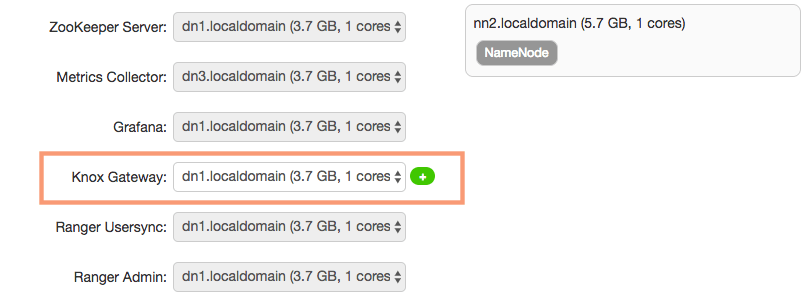

2. Knox Gateway

We need to choose the Knox gateway in this step. We will keep the default selection of dn1 host as the Knox Gateway.

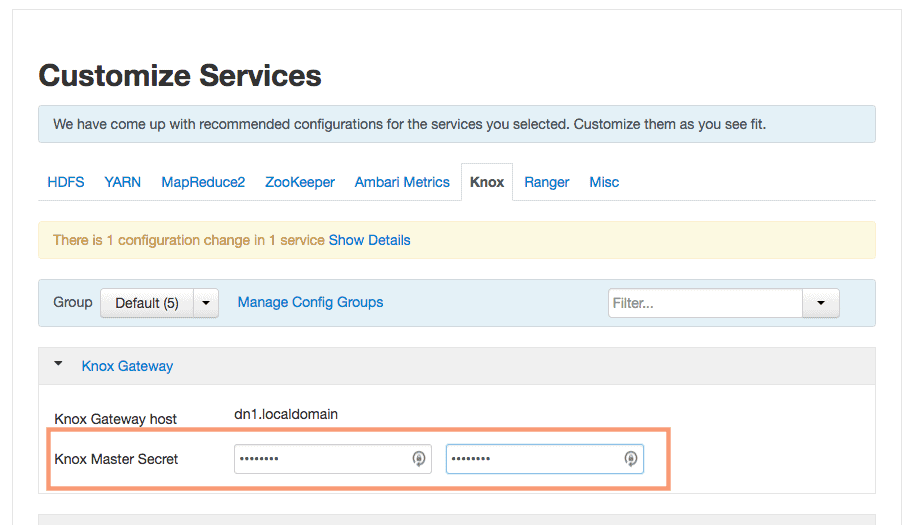

3. Customize Services

We need to provide a “Knox Master Secret” of our choice on this page. You can modify any configuration settings here if you want. I will keep the default settings and proceed.

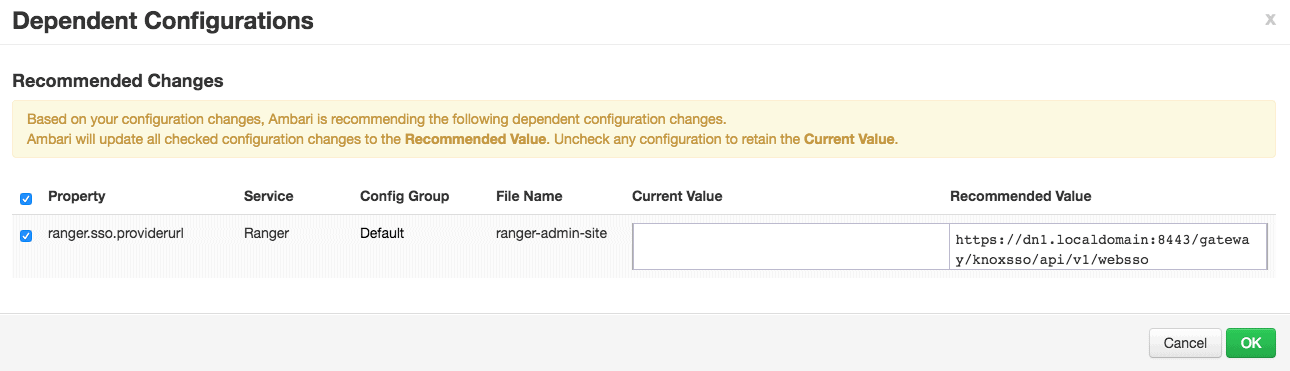

4. Dependent Configurations

Ambari will recommend configuration changes if any of the dependent services requires them. For me, it prompts for a recommended change in the Ranger configuration. I will accept the recommended value and proceed.

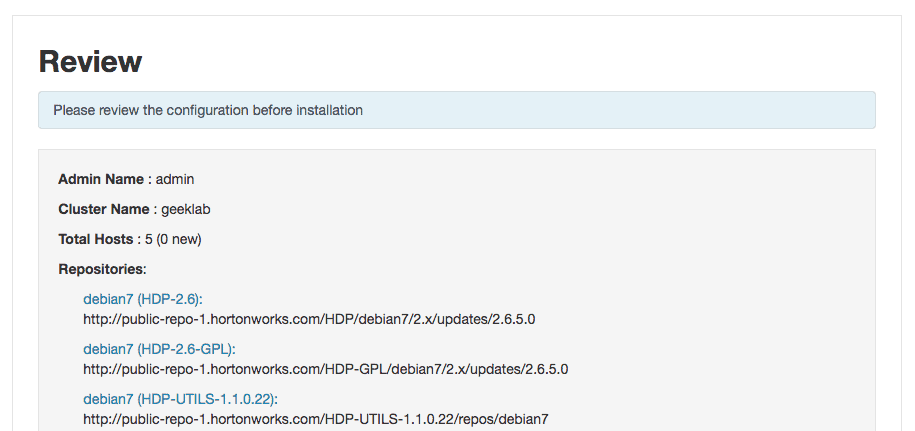

5. Review

You can review the configuration at this stage. No changes can be made after this step.

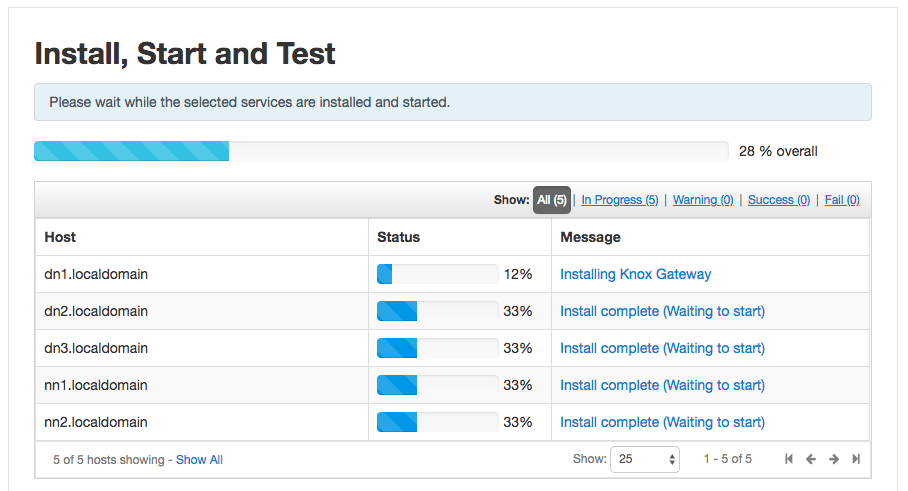

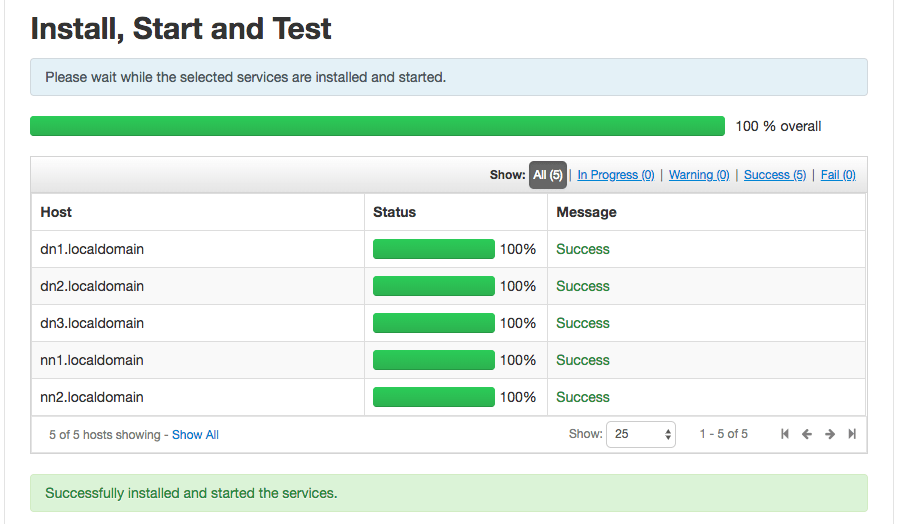

6. Install, Start and Test

Ambari will start installing the Knox service components on the required hosts. Ambari will also start the Knox service components and will test them as well.

If everything works as expected, you should see a successful installation of the Knox service.

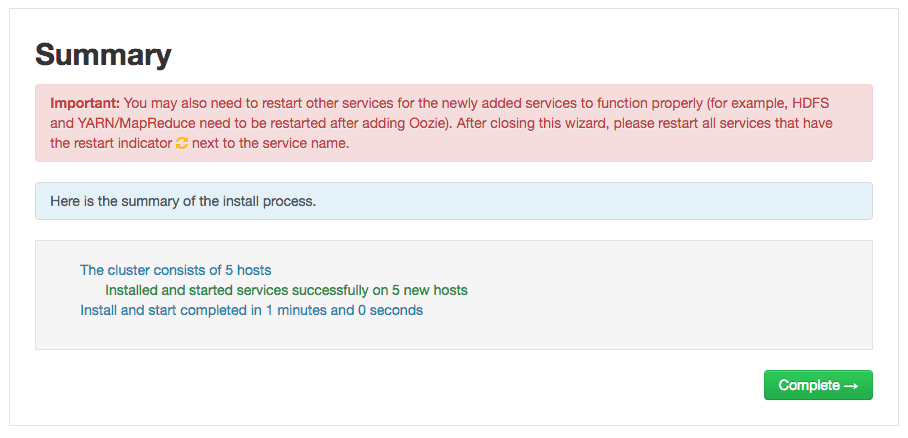

7. Summary

You can review the summary page of the installation process here.

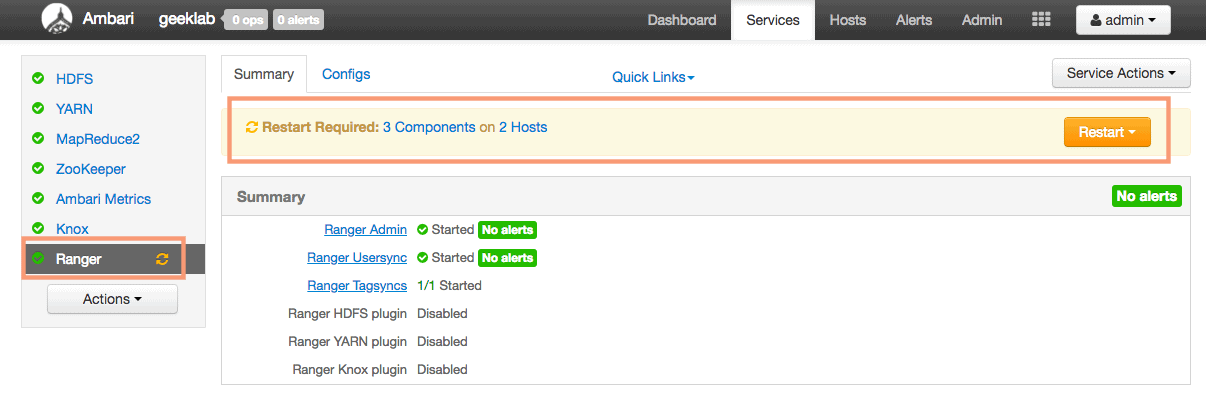

8. Restart of Services

As the Ambari dashboard suggests, we need to restart the Ranger service components. We will restart all the affected service components from Ambari.

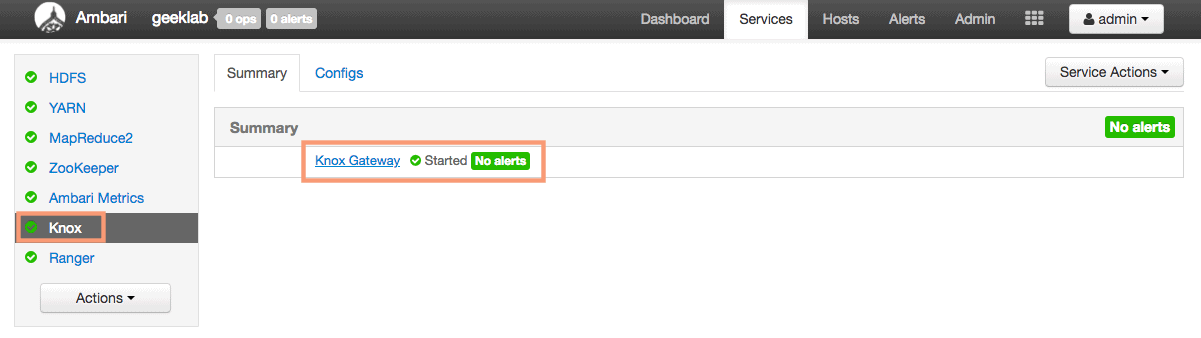

Verify the status of service

You can verify the status of the service on the “Services” page in the Ambari web UI. As shown below, the Knox service page shows that the “Knox Gateway” is started without any alerts.

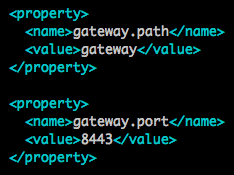

Default Knox Gateway port and path

The default gateway port for the Knox service is 8443 and the default gateway path is “gateway”. These properties are defined in the configuration file /etc/knox/conf/gateway-site.xml on the Knox gateway host.
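To confirm which port and path a gateway host is actually using, the two values can be read straight out of gateway-site.xml. The sketch below assumes the usual Hadoop-style layout, where each property is a `<name>` element followed by a `<value>` element, with no XML comments in between. `get_prop` is a hypothetical helper name; `gateway.port` and `gateway.path` are the property names Knox uses for these settings.

```shell
#!/bin/sh
# Hypothetical helper: print the <value> that follows a given <name>
# in a Hadoop-style *-site.xml file.
# Assumes plain <name>/<value> elements; this is a text scan, not a full XML parser.
get_prop() {
  # $1 = property name, $2 = path to the XML file
  awk -v want="$1" '
    /<name>/ {
      n = $0
      sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n)
      found = (n == want)          # remember whether this is the wanted property
    }
    found && /<value>/ {
      v = $0
      sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
      print v
      exit                         # first match is enough
    }
  ' "$2"
}

# Example on the gateway host:
# get_prop gateway.port /etc/knox/conf/gateway-site.xml
# get_prop gateway.path /etc/knox/conf/gateway-site.xml
```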

Verify Knox functionality

In production environments, you may have an external LDAP server for authentication. For certification and testing purposes, a small Demo LDAP is integrated with the Knox service.

1. Before the Demo LDAP is started, the Knox configuration directory (/etc/knox/conf) is missing a few configuration files, such as gateway-site.xml. Here is the content of the directory before starting the Demo LDAP:

$ ls -lrt /etc/knox/conf/

total 28

-rw-r--r--. 1 knox knox 2447 Jul 22 07:23 gateway-log4j.properties

drwxr-xr-x. 2 knox knox 94 Jul 22 07:23 topologies

-rw-r--r--. 1 knox knox 1814 Jul 22 07:23 ldap-log4j.properties

-rw-r--r--. 1 knox knox 2765 Jul 22 07:23 users.ldif

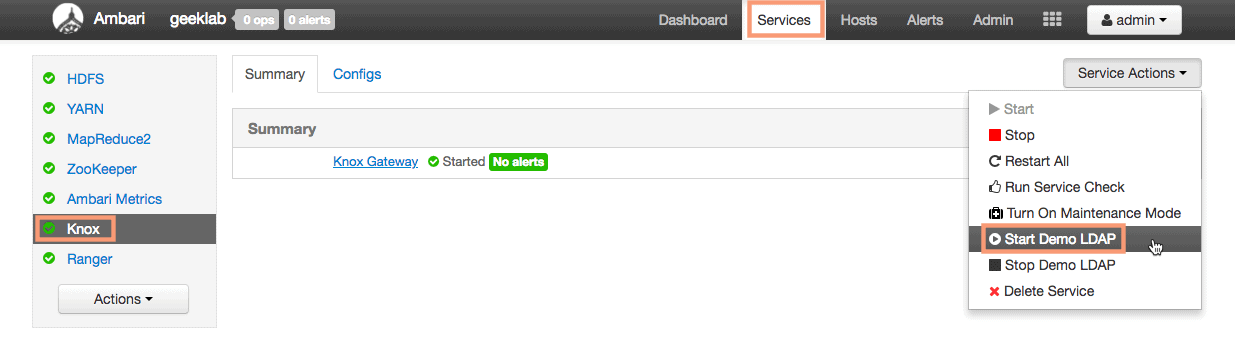

2. Let’s start the Knox Demo LDAP first. Go to Services > Knox and, from the “Service Actions” drop-down, start the Demo LDAP.

3. If you now check the configuration directory, you will find that all the configuration files are present.

# ls -lrt /etc/knox/conf/

total 28

-rw-r--r--. 1 knox knox 1436 May 11 13:21 shell-log4j.properties

-rw-r--r--. 1 knox knox 1485 May 11 13:21 knoxcli-log4j.properties

-rw-r--r--. 1 knox knox 91 May 11 13:21 README

-rw-r--r--. 1 knox knox 948 Jul 22 07:23 gateway-site.xml

-rw-r--r--. 1 knox knox 2447 Jul 22 07:23 gateway-log4j.properties

drwxr-xr-x. 2 knox knox 94 Jul 22 07:23 topologies

-rw-r--r--. 1 knox knox 1814 Jul 22 07:23 ldap-log4j.properties

-rw-r--r--. 1 knox knox 2765 Jul 22 07:23 users.ldif

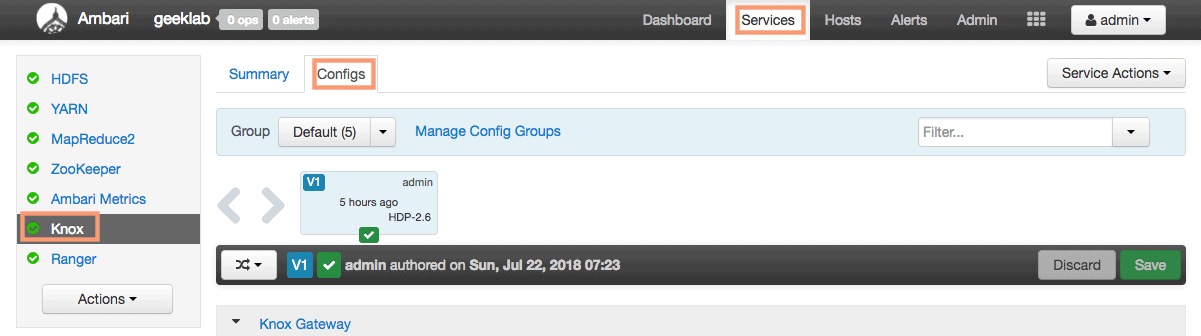

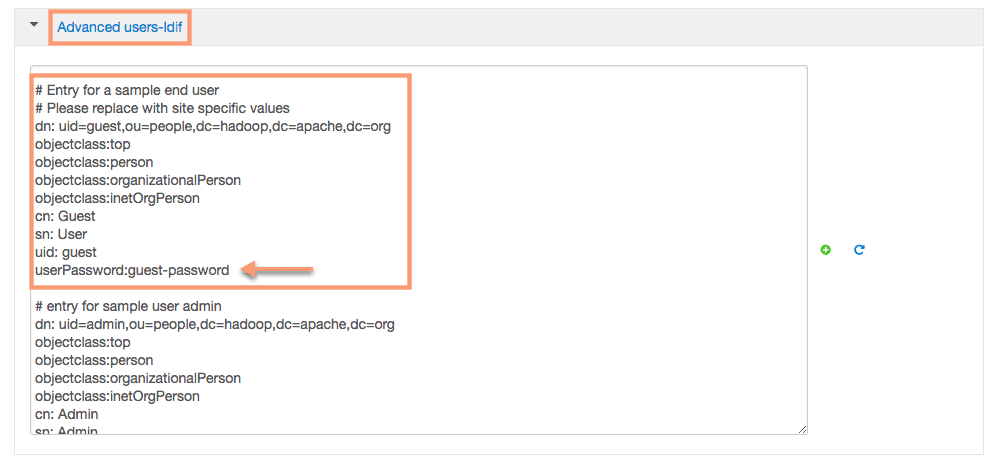

4. We can use a user from the Demo LDAP setup under Knox. To find the usernames in the Demo LDAP, you can either view the file /etc/knox/conf/users.ldif or, from Ambari, go to Services > Knox > Config and view the property Advanced users-ldif in the config properties.
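If you prefer the command line to opening the file, the usernames can be pulled out of users.ldif with a one-line filter. This is a small sketch that assumes the accounts are stored as plain-text `uid:` attribute lines; `ldif_users` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print the value of every plain-text "uid:" attribute
# line in an LDIF file, one per line.
# Lines such as "dn: uid=guest,..." are skipped because they do not start with "uid:".
ldif_users() {
  # $1 = path to the LDIF file
  sed -n 's/^uid:[[:space:]]*//p' "$1"
}

# Example on the gateway host:
# ldif_users /etc/knox/conf/users.ldif
```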

5. We will use the user guest with the password guest-password to validate the Knox functionality. Let’s list the contents of the directory /user using curl and WebHDFS:

Invoke the LISTSTATUS operation on WebHDFS via the gateway

The syntax of the command used here is:

# curl -i -k -u [ldap user]:[ldap user's password] "https://[knox gateway host]:[gateway port]/[gateway path]/[service]?op=[operation]"

So here we have,

ldap user – guest

ldap user’s password – guest-password

knox gateway host – dn1.localdomain

gateway port – 8443

gateway path – gateway/default (the gateway path followed by the topology name, default)

service – WebHDFS (webhdfs/v1/user)

operation to perform – LISTSTATUS
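The pieces listed above can be assembled mechanically. The sketch below builds the URL from its parts and wraps the curl call. Both function names are hypothetical, and the gateway path is passed without a leading slash (for example `gateway/default`). The curl options are the same ones used in this article.

```shell
#!/bin/sh
# Hypothetical helper: build a WebHDFS URL that goes through the Knox gateway.
knox_webhdfs_url() {
  # $1 = gateway host, $2 = gateway port, $3 = gateway path incl. topology,
  # $4 = HDFS path (with leading slash), $5 = WebHDFS operation
  printf 'https://%s:%s/%s/webhdfs/v1%s?op=%s\n' "$1" "$2" "$3" "$4" "$5"
}

# Hypothetical wrapper: run the request with basic authentication.
# -i prints response headers, -k skips certificate verification
# (acceptable for a test cluster with a self-signed certificate).
knox_webhdfs() {
  # $1 = user:password, remaining arguments as for knox_webhdfs_url
  cred=$1
  shift
  curl -i -k -u "$cred" "$(knox_webhdfs_url "$@")"
}

# Example (needs a running gateway, so it is left commented out):
# knox_webhdfs guest:guest-password dn1.localdomain 8443 gateway/default /user LISTSTATUS
```

Keeping the URL construction separate from the curl call makes it easy to print and check the URL before sending any credentials.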

# curl -i -k -u guest:guest-password "https://dn1.localdomain:8443/gateway/default/webhdfs/v1/user?op=LISTSTATUS"

HTTP/1.1 200 OK

Date: Sun, 22 Jul 2018 09:43:50 GMT

Set-Cookie: JSESSIONID=hy8dpil2pvtksctrqzysggbs;Path=/gateway/default;Secure;HttpOnly

Expires: Thu, 01 Jan 1970 00:00:00 GMT

Set-Cookie: rememberMe=deleteMe; Path=/gateway/default; Max-Age=0; Expires=Sat, 21-Jul-2018 09:43:50 GMT

Cache-Control: no-cache

Expires: Sun, 22 Jul 2018 09:43:50 GMT

Date: Sun, 22 Jul 2018 09:43:50 GMT

Pragma: no-cache

Expires: Sun, 22 Jul 2018 09:43:50 GMT

Date: Sun, 22 Jul 2018 09:43:50 GMT

Pragma: no-cache

Content-Type: application/json; charset=UTF-8

X-FRAME-OPTIONS: SAMEORIGIN

Server: Jetty(6.1.26.hwx)

Content-Length: 957

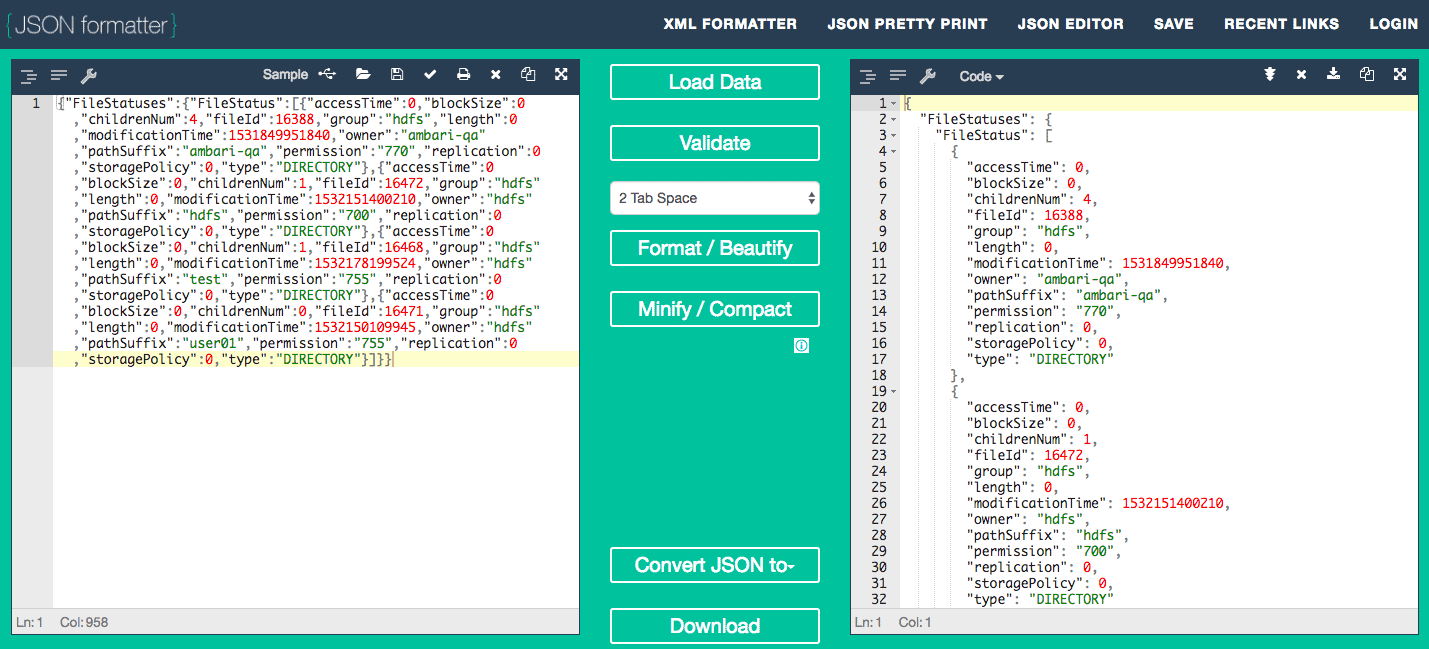

{"FileStatuses":{"FileStatus":[{"accessTime":0,"blockSize":0,"childrenNum":4,"fileId":16388,"group":"hdfs","length":0,"modificationTime":1531849951840,"owner":"ambari-qa","pathSuffix":"ambari-qa","permission":"770","replication":0,"storagePolicy":0,"type":"DIRECTORY"},{"accessTime":0,"blockSize":0,"childrenNum":1,"fileId":16472,"group":"hdfs","length":0,"modificationTime":1532151400210,"owner":"hdfs","pathSuffix":"hdfs","permission":"700","replication":0,"storagePolicy":0,"type":"DIRECTORY"},{"accessTime":0,"blockSize":0,"childrenNum":1,"fileId":16468,"group":"hdfs","length":0,"modificationTime":1532178199524,"owner":"hdfs","pathSuffix":"test","permission":"755","replication":0,"storagePolicy":0,"type":"DIRECTORY"},{"accessTime":0,"blockSize":0,"childrenNum":0,"fileId":16471,"group":"hdfs","length":0,"modificationTime":1532150109945,"owner":"hdfs","pathSuffix":"user01","permission":"755","replication":0,"storagePolicy":0,"type":"DIRECTORY"}]}}

Here we have listed the directories under /user in HDFS using curl and the Knox gateway on dn1. The result (highlighted in red in the screenshot) is in JSON format. You can use any online JSON formatter to format this output.
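When only the directory names matter, a formatter is not strictly needed. As a rough sketch, the `pathSuffix` fields can be cut out of the response with standard text tools. This assumes the compact JSON layout shown above, with no spaces around the colon, and `list_names` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read a LISTSTATUS JSON response on stdin and print
# each "pathSuffix" value on its own line.
# Plain text matching, not a JSON parser: it relies on the compact
# "pathSuffix":"name" form seen in the response above.
list_names() {
  grep -o '"pathSuffix":"[^"]*"' | cut -d'"' -f4
}

# Example (note -s instead of -i, so only the JSON body is piped):
# curl -s -k -u guest:guest-password \
#   "https://dn1.localdomain:8443/gateway/default/webhdfs/v1/user?op=LISTSTATUS" | list_names
```

For full pretty-printing on a host where Python is available, piping the same body through `python -m json.tool` is an alternative.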

The output we got is equivalent to executing the command below on the dn1 cluster node.

$ hdfs dfs -ls /user

Found 4 items

drwxrwx--- - ambari-qa hdfs 0 2018-07-17 23:22 /user/ambari-qa

drwx------ - hdfs hdfs 0 2018-07-21 11:06 /user/hdfs

drwxr-xr-x - hdfs hdfs 0 2018-07-21 18:33 /user/test

drwxr-xr-x - hdfs hdfs 0 2018-07-21 10:45 /user/user01